European banks are pioneering privacy-compliant fraud detection systems using small language models (SLMs) and established AI techniques. These systems deliver tangible benefits, such as reduced losses and fewer false positives, while adhering to stringent EU regulations, which positions the banks ahead of US giants burdened by less unified privacy frameworks.

The Rise of AI in European Banking Fraud Detection

AI adoption for fraud detection has surged among European banks. In the European Central Bank’s (ECB) 2025 AI workshops with 13 supervised banks across nine countries, 10 institutions reported deploying AI for fraud detection (about 26% of significant institutions reporting production use cases).[1][2] These systems target diverse threats, including fraudulent transactions, account takeovers, identity fraud, and loan fraud. They often use internally developed models, with the flexibility to source components from third-party providers.

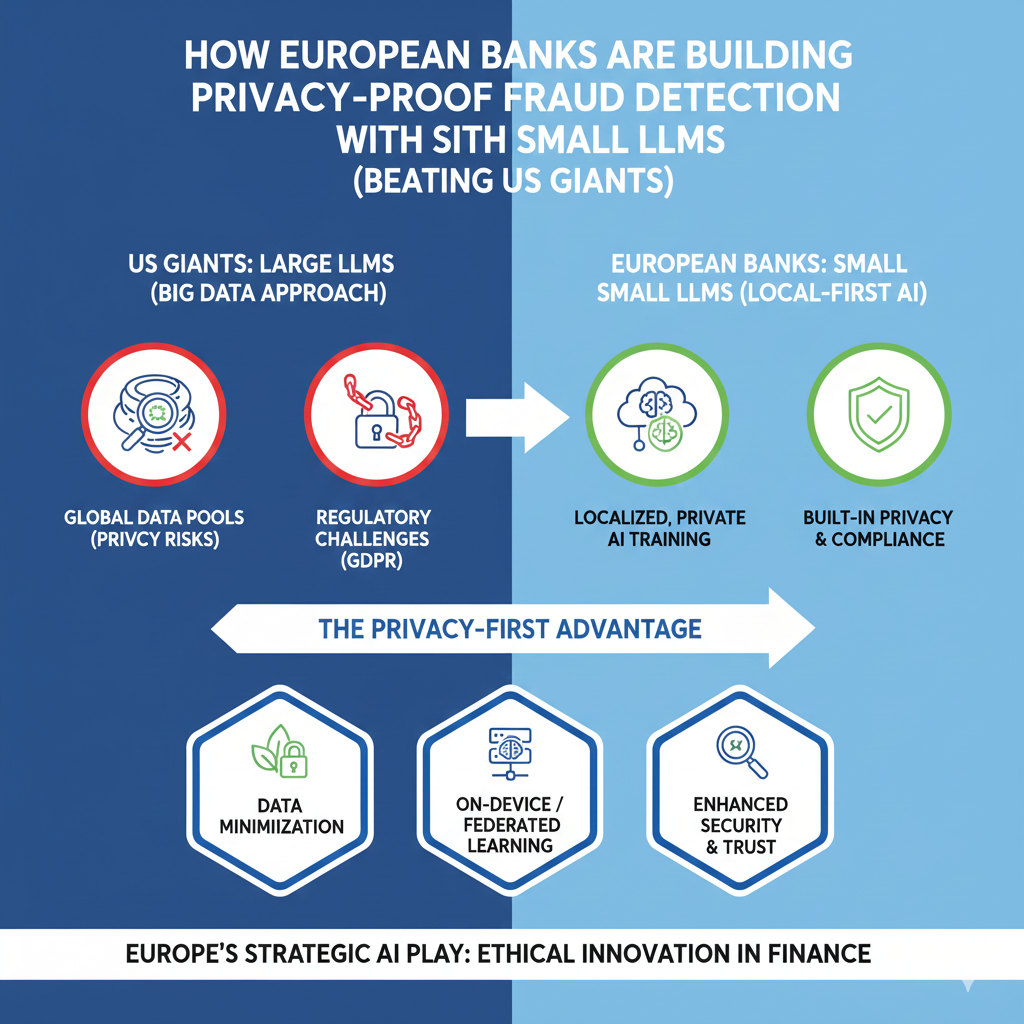

Unlike the large foundation models common in US tech-driven approaches, European banks favor smaller, efficient AI models such as decision-tree-based algorithms and gradient boosting machines. Neural networks appear more often in fraud detection than in credit scoring. However, no bank in the ECB sample allows models to keep learning after deployment, which preserves stability and auditability.[2] This conservative yet effective strategy emphasizes human oversight: AI generates real-time alerts that fraud experts review, a “human-in-the-loop” approach central to operations.
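
Here is a minimal Python sketch of that pattern, built on three assumptions. First, a small boosted ensemble of decision stumps, trained offline, scores each transaction. Second, the ensemble is held in an immutable tuple so nothing updates it in production. Third, a score above the threshold only creates an alert for an analyst. The feature names (`amount_eur`, `new_device`, `txn_last_hour`), leaf values, base score, and 0.5 threshold are hypothetical placeholders, not figures from any bank.

```python
import math
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Stump:
    """A depth-1 decision tree: compare one feature with a threshold, emit a leaf value."""
    feature: str
    threshold: float
    left: float   # leaf value when feature <= threshold
    right: float  # leaf value when feature > threshold

    def predict(self, tx):
        return self.left if tx[self.feature] <= self.threshold else self.right


# Hypothetical ensemble standing in for one trained offline and approved by validation.
# It is a tuple of frozen dataclasses, and no code path updates it after deployment.
MODEL = (
    Stump("amount_eur", 2_000.0, -0.4, 0.9),
    Stump("new_device", 0.5, -0.2, 0.7),
    Stump("txn_last_hour", 3.0, -0.3, 0.8),
)
BASE_SCORE = -1.5  # hypothetical starting log-odds


def fraud_probability(tx):
    # Boosted trees add their leaf values in log-odds space, then a sigmoid maps to [0, 1].
    log_odds = BASE_SCORE + sum(stump.predict(tx) for stump in MODEL)
    return 1.0 / (1.0 + math.exp(-log_odds))


@dataclass
class AlertQueue:
    threshold: float = 0.5
    pending: list = field(default_factory=list)

    def screen(self, tx_id, tx):
        p = fraud_probability(tx)
        if p >= self.threshold:
            # The model only raises an alert; a fraud analyst makes the final decision.
            self.pending.append((tx_id, round(p, 3)))
        return p


queue = AlertQueue()
queue.screen("tx-1", {"amount_eur": 50.0, "new_device": 0, "txn_last_hour": 1})
queue.screen("tx-2", {"amount_eur": 5_000.0, "new_device": 1, "txn_last_hour": 6})
print(queue.pending)
```

The model is kept read-only, and every high score goes to a human queue. This mirrors the two controls the ECB sample emphasizes: no post-deployment self-learning and expert review of alerts.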

Privacy and Compliance: The EU AI Act Edge

Europe’s regulatory environment, particularly the EU AI Act, drives privacy-proof designs. Banks expect most fraud detection AI to be classified as low-risk. They still require clear business cases, testing, explainability, human oversight, and governance for it.[1] Emerging practices include self-hosting LLMs on private clouds or using EU-based providers, which ensures data sovereignty and minimizes third-party risks such as data privacy breaches.

Comprehensive compliance checks, backup options, and EU-centric providers support operational resilience. About half the sampled banks have dedicated AI policies or committees, and some have appointed Chief AI Officers for accountability. This proactive stance contrasts with the US. There, a patchwork of state-level privacy laws (e.g., the CCPA in California) complicates scalable deployments. US banks also often rely on large cloud-hosted LLMs from providers such as OpenAI or Google, which can raise cross-border data transfer concerns under the GDPR when EU customer data is involved.
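
One way to picture EU-centric sourcing with backup options is a routing rule that only ever selects endpoints in approved regions. It tries a self-hosted model first and an EU-based provider second. This is a hypothetical sketch: the endpoint names, region labels, and the `ALLOWED_REGIONS` policy are illustrative assumptions, not any bank's configuration.

```python
from dataclasses import dataclass

# Hypothetical data-residency policy: the only regions a request may be sent to.
ALLOWED_REGIONS = {"eu-central", "eu-west", "on-prem-eu"}


@dataclass(frozen=True)
class Endpoint:
    name: str
    region: str
    healthy: bool


def pick_endpoint(candidates):
    """Return the first healthy endpoint inside an allowed region, in priority order.

    Endpoints outside the allowed regions are never chosen, even as a fallback,
    so an outage of the primary cannot silently send data across borders.
    """
    for endpoint in candidates:
        if endpoint.region in ALLOWED_REGIONS and endpoint.healthy:
            return endpoint
    raise RuntimeError("no compliant endpoint available; fall back to rule-based screening")


# Hypothetical priority list: self-hosted primary, a non-EU option, and an EU-based backup.
endpoints = [
    Endpoint("self-hosted-slm", "on-prem-eu", healthy=False),  # primary is down
    Endpoint("us-hosted-llm", "us-east", healthy=True),        # never eligible
    Endpoint("eu-provider-slm", "eu-west", healthy=True),      # EU backup
]
chosen = pick_endpoint(endpoints)
print(chosen.name)  # eu-provider-slm
```

In practice, data-residency controls would also sit in network configuration and provider contracts, not only in application code.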

Feedback loops between model reviewers and AI systems enhance accuracy without compromising privacy. Centralized dashboards and explainability tools quantify how much each input contributes to a score. However, banks do not share a single definition of explainability, and their models range from fully to partially explainable.[1]
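
For a linear scoring model, input contributions like those such dashboards display can be computed exactly. Each feature adds its weight times its deviation from the training mean, w_i(x_i - mean_i), to the log-odds. This matches what SHAP reports for linear models when features are treated as independent. The sketch assumes a hypothetical three-feature model. It also logs analyst verdicts for later offline retraining, so the feedback loop never modifies the live model.

```python
# Hypothetical linear model (weights on the log-odds scale) and training-set feature means.
WEIGHTS = {"amount_eur": 0.0004, "new_device": 1.2, "foreign_ip": 0.9}
MEANS = {"amount_eur": 300.0, "new_device": 0.1, "foreign_ip": 0.05}


def contributions(tx):
    """Each feature's contribution to the log-odds, relative to an average transaction."""
    contrib = {f: WEIGHTS[f] * (tx[f] - MEANS[f]) for f in WEIGHTS}
    # Largest absolute contribution first, as a reviewer dashboard might list them.
    return dict(sorted(contrib.items(), key=lambda kv: abs(kv[1]), reverse=True))


feedback_log = []


def record_review(tx_id, tx, analyst_verdict):
    # Verdicts are stored for the next offline retraining and validation cycle;
    # the deployed WEIGHTS are not touched here.
    feedback_log.append({"tx_id": tx_id, "features": dict(tx), "label": analyst_verdict})


tx = {"amount_eur": 2_300.0, "new_device": 1, "foreign_ip": 0}
for feature, value in contributions(tx).items():
    print(f"{feature:>12}: {value:+.3f}")
record_review("tx-42", tx, analyst_verdict="confirmed_fraud")
```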

Real-World Benefits: Accuracy and Efficiency Gains

European banks report concrete advantages. AI improves fraud detection accuracy, cutting both losses and false positives, two key metrics for operational efficiency.[1] Real-time pattern recognition stops fraud pre-emptively and reduces manual reviews. In credit scoring, AI similarly enables more precise risk assessments and lower default rates. In fraud detection, however, it performs best alongside legacy tools, which provide additional assurance.
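
Accuracy and false positives are usually tracked with standard confusion-matrix metrics. The sketch computes precision, recall, false positive rate, and alert volume at several score thresholds for ten hypothetical transactions. It illustrates the trade-off a bank tunes when deciding how many alerts its analysts can review. It does not reproduce any bank's reported results.

```python
def alert_metrics(scores, labels, threshold):
    """Confusion-matrix metrics for alerts raised at a given score threshold.

    labels: 1 = confirmed fraud, 0 = legitimate transaction.
    """
    tp = fp = fn = tn = 0
    for score, is_fraud in zip(scores, labels):
        alerted = score >= threshold
        if alerted and is_fraud:
            tp += 1
        elif alerted:
            fp += 1
        elif is_fraud:
            fn += 1
        else:
            tn += 1
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,  # share of alerts that were fraud
        "recall": tp / (tp + fn) if tp + fn else 0.0,     # share of fraud that was caught
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "alerts": tp + fp,                                # manual-review workload
    }


# Hypothetical model scores and confirmed outcomes for ten transactions.
scores = [0.95, 0.91, 0.80, 0.72, 0.60, 0.40, 0.35, 0.20, 0.10, 0.05]
labels = [1, 1, 0, 1, 0, 0, 1, 0, 0, 0]
for t in (0.3, 0.5, 0.75):
    print(t, alert_metrics(scores, labels, t))
```

Raising the threshold lowers the false positive rate and the review workload but lets more fraud through, which is why banks pair the model with expert review rather than relying on a single cut-off.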

Although precise quantification remains difficult, broader fintech trends indicate the scale of the impact. AI-based fraud detection reduced losses by 40% for major platforms in recent years, a benefit European banks aim to replicate through tailored, privacy-focused implementations.[3] Regular model monitoring, expert reviews, and structured controls help identify biases, while fallback procedures are still in development.
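
The text does not say which monitoring metrics banks use. One common choice for checking whether a model's score distribution has shifted since validation is the Population Stability Index. It is defined as PSI = Σ (a_i - e_i) ln(a_i / e_i), where e_i and a_i are the baseline and recent shares of scores in bin i. The bin counts below are hypothetical. The 0.1 and 0.25 cut-offs are a widely quoted rule of thumb, not a regulatory standard. PSI flags distribution drift; a bias check would additionally compare metrics across customer groups.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between a baseline and a recent score distribution.

    Both arguments are counts per score bin, computed with the same bin edges.
    """
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    value = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # Floor the proportions at eps so an empty bin cannot cause log(0) or division by zero.
        e_p = max(e / e_total, eps)
        a_p = max(a / a_total, eps)
        value += (a_p - e_p) * math.log(a_p / e_p)
    return value


def drift_status(value):
    # Rule-of-thumb bands; each bank would set and document its own thresholds.
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "monitor"
    return "investigate"


baseline = [400, 300, 200, 80, 20]     # hypothetical score-bin counts at validation time
this_month = [300, 280, 230, 120, 70]  # hypothetical counts from recent production traffic
value = psi(baseline, this_month)
print(f"PSI={value:.3f} -> {drift_status(value)}")
```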

Small Language Models vs. US Giants: A Strategic Advantage

US giants such as JPMorgan and Bank of America use massive LLMs for fraud detection, but that scale introduces privacy hurdles. European banks’ small language models are optimized for edge deployment on private infrastructure. They offer explainability and low latency without requiring vast data centers, in line with the ECB’s supervisory priorities for 2026-2028, which focus on AI governance and risk.[2]

This approach gives European banks an edge over US counterparts in regulatory agility. EU banks integrate AI into existing risk frameworks, drawing on “golden” data sources and dual-role Chief Data/AI Officers. Prohibiting self-learning after deployment keeps model behavior predictable, which is vital under micro-prudential supervision. As fraud, new payment systems, and new regulations converge in 2026, Europe’s privacy-proof models position banks to modernize their controls effectively.[4][5]
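
The ban on post-deployment self-learning can be backed by a simple integrity check. The bank records a hash of the parameters approved at validation and refuses to load anything that differs. This is a sketch under assumptions: `FrozenModelRegistry` and the parameter layout are hypothetical, and only the standard-library `hashlib` and `json` modules are used.

```python
import hashlib
import json


def fingerprint(params):
    # Canonical JSON (sorted keys, fixed separators) so identical parameters always hash identically.
    blob = json.dumps(params, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


class FrozenModelRegistry:
    """Hypothetical registry: only parameter sets approved by validation may be loaded."""

    def __init__(self):
        self._approved = {}

    def approve(self, version, params):
        self._approved[version] = fingerprint(params)

    def load(self, version, params):
        if self._approved.get(version) != fingerprint(params):
            raise ValueError(f"model {version} differs from the approved version; refusing to load")
        return params


registry = FrozenModelRegistry()
approved_params = {"weights": {"amount_eur": 0.0004, "new_device": 1.2}, "threshold": 0.5}
registry.approve("fraud-v3", approved_params)
registry.load("fraud-v3", approved_params)  # identical parameters load normally

drifted = {**approved_params, "threshold": 0.45}  # e.g. an unreviewed post-deployment tweak
try:
    registry.load("fraud-v3", drifted)
except ValueError as err:
    print(err)
```

Any change, including a retrained model, then has to pass validation again and receive a new approved fingerprint before it can run.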

Challenges and Emerging Practices

Challenges remain: few automated validation tools exist, so banks rely on expert oversight instead. Risks from external providers are prompting EU-based sourcing and a stronger focus on cybersecurity. During transitions, banks run AI alongside traditional models, which also enhances explainability.
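
Running AI alongside traditional models is often done in shadow mode. The legacy rules keep making decisions, while the new model's alerts are only logged and compared. The sketch assumes hypothetical rules, a placeholder scoring function, and four made-up transactions. The disagreement lists are what expert reviewers would examine before any switch-over.

```python
def rule_engine(tx):
    # Hypothetical legacy rules: a large amount from a new device, or a burst of transactions.
    return (tx["amount_eur"] > 1_000 and tx["new_device"]) or tx["txn_last_hour"] > 5


def model_score(tx):
    # Hypothetical placeholder for the candidate AI model's fraud probability.
    return min(1.0, tx["amount_eur"] / 5_000 + 0.3 * tx["new_device"] + 0.05 * tx["txn_last_hour"])


def shadow_compare(transactions, threshold=0.6):
    """Run the model in shadow mode next to the rules and report where they disagree.

    Only the rule engine's decision is acted on; the model's output is just recorded.
    """
    report = {"both": 0, "rules_only": [], "model_only": [], "neither": 0}
    for tx_id, tx in transactions.items():
        by_rules = bool(rule_engine(tx))
        by_model = model_score(tx) >= threshold
        if by_rules and by_model:
            report["both"] += 1
        elif by_rules:
            report["rules_only"].append(tx_id)
        elif by_model:
            report["model_only"].append(tx_id)
        else:
            report["neither"] += 1
    return report


txs = {
    "a": {"amount_eur": 50, "new_device": 0, "txn_last_hour": 1},
    "b": {"amount_eur": 2_500, "new_device": 1, "txn_last_hour": 2},
    "c": {"amount_eur": 800, "new_device": 0, "txn_last_hour": 8},
    "d": {"amount_eur": 2_800, "new_device": 0, "txn_last_hour": 4},
}
print(shadow_compare(txs))
```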

Looking to 2026, priorities include real-time adaptive security across increasingly complex fintech infrastructure. The ECB plans industry engagement and expert consultations to mitigate emerging risks. The aim is for AI to strengthen resilience gradually rather than revolutionize banking overnight, while still delivering superior, privacy-proof performance.

Conclusion

European banks’ use of small language models for fraud detection exemplifies balanced innovation: privacy-compliant, accurate, and human-overseen. This strategy not only meets EU AI Act demands but also outperforms US giants in efficiency and compliance, heralding a new era of secure banking.

References

- [1] https://www.bankingsupervision.europa.eu/ecb/pub/pdf/annex/ssm.nl251120_1_annex.en.pdf
- [2] https://www.bankingsupervision.europa.eu/press/supervisory-newsletters/newsletter/2025/html/ssm.nl251120_1.en.html
- [3] https://wezom.com/blog/fintech-development-trends-2026
- [4] https://www.niceactimize.com/blog/fraud-in-2026-preparing-for-convergence
- [5] https://thepaymentsassociation.org/article/2026-the-year-uk-banks-either-modernise-fraud-controls-or-lose-the-battle/